Modify involved files

Hi everyone, in this post we explain how to modify the motd (message of the day) and the PS1 prompt to customise our Linux terminal. We use CentOS 7 for this post.

sorea@vmjump01 ~$ cat /etc/redhat-release

CentOS Linux release 7.2.1511 (Core)

Modifying motd

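First, we install figlet with the command below (on CentOS 7 figlet is provided by the EPEL repository, so sudo yum install epel-release may be needed first):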

sorea@vmjump01 ~$ sudo yum install figlet

After the installation, we run the next command, which prints the given text as a large ASCII-art banner:

sorea@vmjump01 ~$ figlet all IT - Blog

Once we have our banner, we can copy it into /etc/motd and edit that file as we like:

sorea@vmjump01 ~$ cat /etc/motd

e.g. Mine looks like this =D
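Instead of copying the banner by hand, the two steps can be combined. The sketch below is a minimal example, assuming bash; make_motd is a hypothetical helper name (not part of figlet), and it falls back to plain text when figlet is not installed.

```shell
#!/usr/bin/env bash
# make_motd TEXT
# Hypothetical helper: prints TEXT as a figlet banner, suitable for /etc/motd.
# Falls back to printing the plain text if figlet is not installed.
make_motd() {
  local text=$1
  if command -v figlet >/dev/null 2>&1; then
    figlet "$text"
  else
    printf '%s\n' "$text"
  fi
}

# Usage (writing /etc/motd needs root, hence sudo tee):
#   make_motd "all IT - Blog" | sudo tee /etc/motd >/dev/null
```

Piping into sudo tee keeps the function itself unprivileged; only the final write to /etc/motd runs as root.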

Modifying .bashrc

To get a prompt like the one in the screenshot (green, white and red, plus the way it shows the directory we are working in), we need to modify the .bashrc file in our home directory. Since we are customising our own user environment, the file is always at ~/.bashrc.

sorea@vmjump01 ~$ ls -la .bashrc

-rw-r--r--. 1 sorea sorea 840 Nov 6 06:51 .bashrc

Let's add the following block of lines to the file:

# Font color

red=$(tput setaf 1)

green=$(tput setaf 2)

yellow=$(tput setaf 3)

blue=$(tput setaf 4)

purple=$(tput setaf 5)

cyan=$(tput setaf 6)

gray=$(tput setaf 7)

# Colours 8 and 9 are outside the basic 8-colour set; how they render depends on $TERM

redStrong=$(tput setaf 8)

white=$(tput setaf 9)

# Background color

blueB=$(tput setab 4)

grayB=$(tput setab 7)

# Reset all attributes (back to the default colours)

sc=$(tput sgr0)

PS1='\[$green\]\u\[$white\]@\[$red\]\h \[$white\]$(IFS=/ d=($PWD); IFS=\n c=${#d[@]}; if [[ $PWD == $HOME ]]; then echo "~"; elif [ $c -gt 5 ]; then echo "/${d[1]}/${d[2]}/.../${d[$c-2]}/${d[$c-1]}"; else echo $PWD; fi)\[$white\]\$ \[$white\]'
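The command substitution inside PS1 is dense, so here is the same directory logic restated as a standalone bash function that can be tried on sample paths. This is a sketch for illustration only: short_pwd is a hypothetical name, and it splits the path with read -a instead of the unquoted array assignment used in the prompt.

```shell
#!/usr/bin/env bash
# short_pwd [DIR] [HOME_DIR]
# Hypothetical helper mirroring the PS1 directory logic above:
#   - "~" when DIR is the home directory
#   - first two and last two components joined by "/.../" when the path
#     splits into more than 5 fields (i.e. 5 or more components)
#   - the full path otherwise
short_pwd() {
  local dir=${1:-$PWD} home=${2:-$HOME}
  local -a d
  IFS=/ read -r -a d <<< "$dir"   # d[0] is the empty field before the leading "/"
  local c=${#d[@]}
  if [[ $dir == "$home" ]]; then
    echo "~"
  elif (( c > 5 )); then
    echo "/${d[1]}/${d[2]}/.../${d[c-2]}/${d[c-1]}"
  else
    echo "$dir"
  fi
}
```

For example, with a sample path, short_pwd /home/sorea/a/b/ansible/customise_cli /home/sorea prints /home/sorea/.../ansible/customise_cli, while a shorter path such as /home/sorea/ansible is printed unchanged.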

Then we run the command below to reload .bashrc in the current shell, so the new variables and prompt take effect:

sorea@vmjump01 ~$ source .bashrc

sorea@vmjump01 ~$

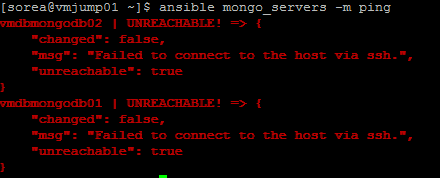
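Colour numbers 8 and 9 (used for redStrong and white) fall outside the basic 8-colour range, so the result depends on the terminal and on $TERM; on a 256-colour terminal they are normally bright black and bright red. If our prompt does not look like the screenshot, a small helper such as the hypothetical show_colors below prints each setaf number in its own colour, so we can pick values that suit our terminal.

```shell
#!/usr/bin/env bash
# show_colors: hypothetical helper that prints foreground colours 0-9,
# each line drawn in its own colour, preceded by the terminal's colour count.
show_colors() {
  local i
  echo "This terminal reports $(tput colors 2>/dev/null || echo '?') colours"
  for i in {0..9}; do
    # colour on, label, then reset with sgr0
    printf '%ssetaf %d%s\n' "$(tput setaf "$i" 2>/dev/null)" "$i" "$(tput sgr0 2>/dev/null)"
  done
}
```

Running show_colors in the same terminal we use for SSH sessions shows directly which numbers render as white, grey or red there.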

All the steps described above apply to a single host. If we want to configure the same environment on many hosts, we can do it with Ansible.

Configure using ansible

The previous steps are still needed for this part, because the playbook copies the motd we created to the remote servers. Now let's create the following directory tree:

sorea@vmjump01 /home/sorea/.../ansible/customise_cli$ tree

.

├── customise.yml

├── files

│ └── motd

└── hosts
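The same layout can be created from the shell. This is a minimal sketch, assuming we are inside the ansible directory and reuse the /etc/motd built in the first part; init_customise_cli is a hypothetical helper, and customise.yml and hosts are only created empty, to be filled in by hand.

```shell
#!/usr/bin/env bash
# init_customise_cli DIR [MOTD_SRC]
# Hypothetical helper that creates the project layout shown above.
#   DIR      - project directory to create (e.g. customise_cli)
#   MOTD_SRC - banner to copy into files/motd (defaults to /etc/motd)
init_customise_cli() {
  local dir=$1 motd=${2:-/etc/motd}
  mkdir -p "$dir/files" || return 1
  cp "$motd" "$dir/files/motd" || return 1   # banner the playbook will push
  touch "$dir/customise.yml" "$dir/hosts"    # playbook and inventory, edited by hand
}
```

Usage: init_customise_cli customise_cli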

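In our playbook, we had to change part of the last line (the PS1 definition): {# #} is the Jinja comment tag, so the {# inside ${#d[@]} opens a comment that is never closed, and templating fails with an error. Wrapping that piece in a Jinja string expression makes Jinja output it literally.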

From

c=${#d[@]};

to

c={{ '${#d[@]}' }};

sorea@vmjump01 /home/sorea/.../ansible/customise_cli$ tail -1 customise.yml

PS1='\[$green\]\u\[$white\]@\[$red\]\h \[$white\]$(IFS=/ d=($PWD); IFS=\n c={{ '${#d[@]}' }}; if [[ $PWD == $HOME ]]; then echo "~"; elif [ $c -gt 5 ]; then echo "/${d[1]}/${d[2]}/.../${d[$c-2]}/${d[$c-1]}"; else echo $PWD; fi)\[$white\]\$ \[$white\]'

Once all files are in place, we run the playbook with the following command to apply the changes (-k makes Ansible prompt for the SSH password):

sorea@vmjump01 /home/sorea/.../ansible/customise_cli$ ansible-playbook customise.yml -i hosts -k

SSH password:

After typing the password, we can follow the progress on screen. Watch this video for execution details:

https://youtu.be/bztt1a0BEy8


The playbook used here is already available on Git: